Friday, October 23, 2009

Engineering

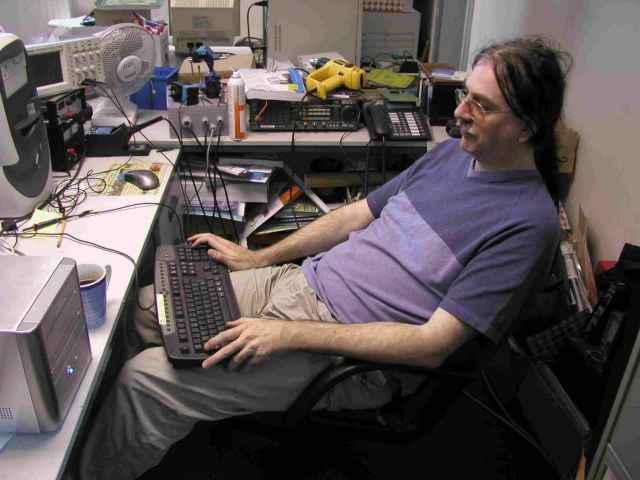

So, after much rummaging about I have got this bastard long-running project to a state where it's worth taking it to the customer and plugging it into their mass spectrometer hardware, where it's basically going to be responsible for controlling the timing, driving various electrostatic lenses and acquiring data.

The design has an FPGA containing a reduced version of the R3220 (my custom 32-bit RISC processor) and about a dozen custom peripherals. It runs a fairly complicated embedded application (written entirely in assembler) consisting of 33 source files, and the damned thing is responsible for some fairly hairy real-time data acquisition in a noisy environment.

As well as the device itself, there are various development tools written to support the design: all in all, some tens of thousands of lines of code, all of which had to work and none of which had actually been tested on the machine.

And to cut to the chase, after we'd set some parameters (there are thirty-odd interface registers to play with) and I'd restored a couple of lines that had been accidentally edited to death, it was controlling the hardware and we were looking at mass peaks... In other words, nearly everything worked first time, and the bit that didn't just required a few seconds of editing to fix.

The thing is, a programmer would regard writing so much code, and in assembler, and having it work virtually straight away as success beyond their wildest dreams, if not as being completely impossible. Me? I'm actually slightly pissed off - if I hadn't made a tired mistake tidying up a file (unnecessarily, at that) the bloody thing would have been right first time... As usual. Bugger!

Still, given that the processor was designed in only ten days, is lovely to use, is kicking the shit out of a Nios2 performance-wise and has behaved perfectly, I suppose I'm allowed a small cackle of victory.

Mu-haha! MU-ha-ha-ha-oh... Sorry. Bit carried away there.

[Update on the "kicking the shit out of a Nios2" comment.

The R3220 (clocked at 30MHz) is handling more data in 5µs than the Nios2 (clocked at 70MHz) managed to handle in 80µs, so as far as the application goes the R3220 is at least 16 times faster outright and, allowing for the difference in clock rate, a factor of 37 or so faster clock for clock.

Much of this performance margin is down to the efficiency of the code they are running, of course, though it should be noted that the Nios2 was running highly optimised C, code that was written and tweaked over a period of months. The R3220 is running hand assembler written over a period of hours and not optimised at all - there was no need.

The Nios2 system also had a lot of hardware support for functions that the R3220 system just does in software, because it can, so the performance factor is arguably even higher than that...]
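As a rough check of the figures in the update, the sketch below works out both the straight wall-clock ratio and the clock-for-clock ratio (clock cycles spent by each processor), using only the times and clock rates quoted. The function and constant names are my own, not anything from the R3220 or Nios2 tooling, and it treats the two workloads as equal; since the R3220 was actually handling more data, both numbers are if anything underestimates.

```python
# Sanity check of the R3220 vs Nios2 figures quoted in the update.
# Clock rates and times come from the text; everything else is derived.

R3220_CLOCK_HZ = 30e6   # R3220 clock: 30 MHz
NIOS2_CLOCK_HZ = 70e6   # Nios2 clock: 70 MHz
R3220_TIME_S = 5e-6     # R3220 handles its data in 5 us
NIOS2_TIME_S = 80e-6    # Nios2 took 80 us for (less) data


def speedups(t_fast, f_fast, t_slow, f_slow):
    """Return (wall-clock speedup, clock-for-clock speedup)."""
    wall = t_slow / t_fast
    cycles_fast = t_fast * f_fast   # clock cycles used by the faster system
    cycles_slow = t_slow * f_slow   # clock cycles used by the slower system
    return wall, cycles_slow / cycles_fast


wall, per_clock = speedups(R3220_TIME_S, R3220_CLOCK_HZ,
                           NIOS2_TIME_S, NIOS2_CLOCK_HZ)
print(f"wall-clock speedup:      {wall:.1f}x")
print(f"clock-for-clock speedup: {per_clock:.1f}x")
```

With the quoted figures this prints 16.0x and 37.3x: the R3220 does the job in 150 cycles against the Nios2's 5,600, which is where the clock-for-clock factor of thirty-odd comes from (strictly 112/3).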

Tuesday, October 20, 2009

CO2

Just watched an advert demonising CO2. Far too little and far too late.

Wonder if any of the stupid ignorant bastards who've been doing their best to doom us all by opposing nuclear power will finally wake up and admit their culpability?

No, don't be stupid crem. They're probably all gearing up to oppose nuclear fusion...

Thursday, October 08, 2009

Great Myths of the IT Industry

- Abstraction is invariably a good thing

- Efficiency doesn't matter

- You can be a competent programmer without understanding hardware, low-level programming, logic, anything much

- Bugs are inevitable

Wednesday, October 07, 2009

Alli

Alli is a new over-the-counter drug that binds to the fat in your diet and prevents the body from absorbing some of it, so that people can carry on stuffing themselves and still lose weight.

In a world where people starve to death it strikes me as almost obscene to market a drug that encourages the rich to eat too much and then shit fat...

Thursday, October 01, 2009

Even more gloom

You know, I wouldn't have thought it possible for today to be worse than yesterday, work-wise.

But it managed.

We have a reasonably large order for a new project, for which I have already designed the hardware, and so I just need to write enough of the software to be sure that the hardware works so we can safely order the PCBs, etc, for manufacture. Though I suspect that, once started, I'd actually write the whole thing so that we can provide an early sample for the customer to evaluate.

So, I duly set up the project and start to write code. Or at least I would, except that for some unknown reason the thrice-damned compiler for one of the processors involved (a Jennic RF device) has stopped working. Yes, the software has rotted. Or, to be more accurate, something I've installed (some other version of gcc, probably) since last time I used it (a few months ago) has messed with the path, or the environment, or whatever, and killed it.

Not that it gives a helpful message, you understand, no, the only indication is an essentially meaningless error from the make process, emitted from one or other of the (slightly incompatible) versions of the fucking make utility I have to have on this system. So once more, if I can work up the energy, I'm going to have to delve into makefile hell, find out what the bastard thing is moaning about, why it has changed, and what to do to fix it. And this, no doubt, will fuck with something else on the system and make that stop working with some obscure problem I will trip over later...
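If the culprit really is a different make (or gcc) shadowing the one the SDK expects, a quick first step is to list every copy on the PATH in search order. This is a minimal sketch, assuming Python is available on the machine; `find_all_on_path` is a hypothetical helper, it only checks that the files exist (not that they are executable), and the extension list is a guess covering Unix-style and Windows `.exe` names.

```python
import os


def find_all_on_path(name, path=None, exts=("", ".exe")):
    """List every file called `name` (plus an optional extension from `exts`)
    found in the directories of `path`, in PATH search order.

    The first hit is typically the one that gets run; later hits are
    shadowed copies. Only file existence is checked, not executability.
    """
    if path is None:
        path = os.environ.get("PATH", "")
    hits = []
    for directory in path.split(os.pathsep):
        if not directory:          # skip empty PATH entries
            continue
        for ext in exts:
            candidate = os.path.join(directory, name + ext)
            if os.path.isfile(candidate) and candidate not in hits:
                hits.append(candidate)
    return hits


if __name__ == "__main__":
    # Mark the copy that wins the PATH search with an arrow.
    for i, hit in enumerate(find_all_on_path("make")):
        print(("-> " if i == 0 else "   ") + hit)
```

Comparing this list with one taken on a machine where the build still works is a cheap way to spot which newly installed tool has pushed itself ahead of the expected make.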

Oh, gods, how I detest relying on other people's development tools. I'd say most of 'em were designed by arseholes, but the truth is that most of 'em weren't designed at all and are just the product of generations of open-sore bodging.

So, what to do? There's something to be said for the idea of using virtual machines to isolate each and every piece of third party garbage out there, but that's a real pain in the arse for people like me who create systems which involve multiple pieces of hardware/software using different CPUs, languages and so on and so forth...

[sigh] I guess I'm going to have to spend some time studying the more arcane details of make utilities and makefiles so I can debug this mess. It's a study I've been putting off for quite some time. Far too many makefiles are childishly overcomplicated and seemingly designed by immature programmers who admire complex solutions to simple problems - baroque stupidity for the terminally anally retarded.

Christ, I sometimes wonder what it is about so many programmers that makes them incapable of grasping the fact that simple solutions are to be preferred to complex ones, but such wondering is clearly a waste of time. The answer is, as it so often is, merely stupidity.

Grump, snarl.

[later]

After a lot of farting about I sort of cured the makefile problem... What I don't understand is what brought it on. The cure was to replace a couple of absolute paths with relative paths.

Like this:

Before:

```makefile
BASE_DIR = c:\foo\bah\sdk
```

After:

```makefile
BASE_DIR = ..\..\foo\bah\sdk
```

Now, the thing I don't understand is what changed on the machine to stop the first version working. The paths haven't changed; the relative paths just refer to the same directories as the absolute paths did. The absolute-path makefiles still work on other machines, they used to work on this one, FFS, and now, without anything changing, they just don't. There are times when I hate computers...
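The claim that the relative `BASE_DIR` points at the same place as the absolute one only holds relative to the directory make is run from. The sketch below uses Python's `ntpath` module (Windows path rules, available on any OS) to show this, assuming a hypothetical working directory of `c:\work\proj`, two levels below the drive root; the post doesn't say where the makefile actually lives, and `resolve` is an illustrative helper, not part of any make tooling.

```python
import ntpath  # Windows path semantics, importable on any platform

ABSOLUTE = r"c:\foo\bah\sdk"      # the original BASE_DIR
RELATIVE = r"..\..\foo\bah\sdk"   # the replacement BASE_DIR


def resolve(base_dir, path):
    """Resolve `path` as a Windows tool would when run from `base_dir`."""
    return ntpath.normpath(ntpath.join(base_dir, path))


# Hypothetical build directory two levels below the drive root:
print(resolve(r"c:\work\proj", RELATIVE))      # c:\foo\bah\sdk, same as ABSOLUTE
# Run one level deeper and the relative path lands somewhere else:
print(resolve(r"c:\work\proj\sub", RELATIVE))  # c:\work\foo\bah\sdk
# An absolute path ignores the working directory entirely:
print(resolve(r"c:\anywhere", ABSOLUTE))       # c:\foo\bah\sdk
```

That is the usual trade-off: the relative form survives the SDK tree being moved as a whole, but quietly depends on make always being invoked from the same depth.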

Morose-looking sheep

"Research by scientists at the Free University in Berlin has shown a link between depressed farmers and vets and the infectious Borna virus which makes cows, sheep and horses behave weirdly. One good method of cheering yourself up during a depressive bout can now be to search your memory for contact with morose-looking sheep and blame it on them".

Try as I might I can't remember close contact with any sheep, morose or otherwise... Though I have shouted "mint sauce!" at them in the distance on occasion, so I suppose it could be delayed payback.

Bastards.
